this post was submitted on 11 Sep 2025

Technology

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related news or articles.

- Be excellent to each other!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below are allowed; this includes AI responses and summaries. To ask if your bot can be added, please contact a mod.

- Check for duplicates before posting; duplicates may be removed.

- Accounts 7 days and younger will have their posts automatically removed.

Approved Bots

founded 2 years ago

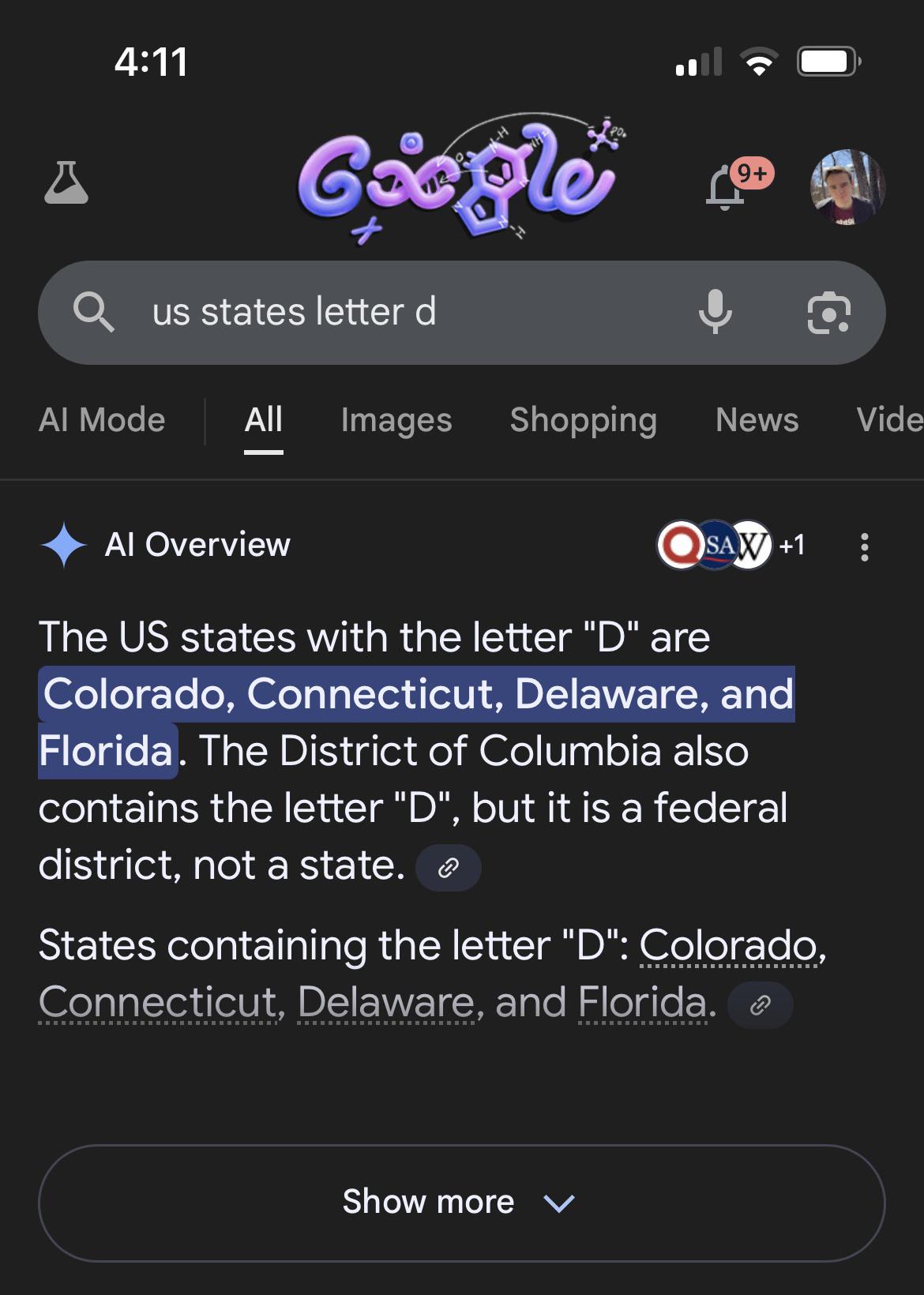

One of these days AI skeptics will grasp that spelling-based mistakes are an artifact of text tokenization, not some wild stupidity in the model. But today is not that day.
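To make the tokenization point concrete, here's a toy sketch. The vocabulary, the example word and the greedy longest-match splitting are all assumptions for illustration; real tokenizers (BPE and friends) learn their vocabularies from data and will split words differently. The point is only that the model receives token IDs, not letters.

```python
# Toy greedy longest-match subword tokenizer.
# VOCAB is a made-up, hypothetical vocabulary for illustration only.
VOCAB = {"straw": 0, "berry": 1, "s": 2, "t": 3, "r": 4, "a": 5, "w": 6,
         "b": 7, "e": 8, "y": 9}


def tokenize(word: str) -> list[str]:
    """Split word into the longest vocabulary pieces, left to right."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a match is found.
        for j in range(len(word), i, -1):
            if word[i:j] in VOCAB:
                pieces.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no token covers {word[i]!r}")
    return pieces


word = "strawberry"
pieces = tokenize(word)
ids = [VOCAB[p] for p in pieces]
print(pieces)           # ['straw', 'berry']
print(ids)              # [0, 1]
# The model only sees [0, 1]; the fact that the word has three "r"s
# is not directly present in that input.
print(word.count("r"))  # 3
```

Counting letters is trivial on the raw string, but a model working on `[0, 1]` has to have memorized how each token is spelled, which is why letter-level questions trip it up.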

You aren't wrong about why it happens, but that's irrelevant to the end user.

The result is that it can give some hilariously incorrect responses at times, and therefore it's not a reliable source of information.

"It"? Are you conflating the low-parameter model that Google uses to generate quick answers with every AI model?

Yes, Google's quick answer product is largely useless. This is because it's a cheap model. Google serves billions of searches per day and isn't going to be paying premium prices to use high-parameter models.

You get what you pay for, and nobody pays for Google, so their product produces the cheapest possible results and, unsurprisingly, cheap AI models are more prone to error.

Yes, it. It's not a person. Were you expecting me to call it anything else?

A calculator app is also incapable of working with letters. Does that show that the calculator is not reliable?

What it shows, badly, is that LLMs offer confident answers in situations where their answers are likely wrong. But it'd be much better to show that with examples that aren't based on inherent technological limitations.

The difference is that Google decided this was a task best suited for their LLM.

If someone sought out an LLM specifically for this question, and Google didn't market their LLM as an assistant that you can ask questions, you'd have a point.

But that's not the case, so alas, you do not have a point.

Mmh, maybe the solution then is to use the tool for what it's good at, within its limitations.

And not promise that it's omnipotent in every application and advertise/implement it as such.

Mmmmmmmmmmh.

As long as LLMs are built into everything, it's legitimate to criticise the model's little stupidities.