this post was submitted on 30 Nov 2025

Microblog Memes

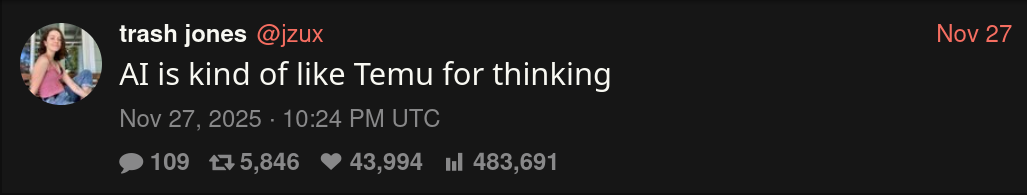

It depends on the model.

I've had some interactions where you can make it flip-flop just by repeatedly asking "are you sure about that?"

Or another time, I was thinking about some math thing and figured it was probably already a theorem if it was true. I asked one of the GPT-5 models on DuckDuckGo, then argued with it for longer than I should have when it gave a response that was obviously wrong. I asked another model, and it gave the correct response plus told me the name of the theorem.

So I asked the first one about that theorem, and yes, it was familiar with it, but it claimed the theorem didn't apply in my case for some bs reason (my specific case trivially reduced to exactly what the theorem was about). I did eventually get it to admit the truth, but it just wouldn't let it go for the longest time.

So it doesn't hurt to ask, but a) it might be wrong when it corrects something that was right, and b) it might argue it is right when it is wrong.

Really? lol that's sad