Programmer Humor

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code there's also Programming Horror.

Rules

- Keep content in English

- No advertisements

- Posts must be related to programming or programmer topics

If I had a nickel for every database I've lost because I let docker broadcast its port on 0.0.0.0 I'd have about 35¢

How though? A database in Docker generally doesn't need any exposed ports, which means no ports open in UFW either.

NAT is not security.

Keep that in mind.

It's just a crutch IPv4 has to use because it's not as powerful as the almighty IPv6.

This post inspired me to try Podman. After it pulled all the images it needed, my Proxmox VM died, and the VM won’t boot because the disk is now full. It’s currently 10pm; tonight’s going to suck.

My impression from a recent crash course on Docker is that it got popular because it allows script kiddies to spin up services very fast without knowing how they work.

OWASP was like "you can follow these thirty steps to make Docker secure, or just run Podman instead." https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html

> My impression from a recent crash course on Docker is that it got popular because it allows script kiddies to spin up services very fast without knowing how they work.

That's only a side effect. It mainly got popular because it is very easy for developers to ship a single image that just works instead of packaging for various different operating systems with users reporting issues that cannot be reproduced.

I don't really understand the problem with that?

Everyone is a script kiddie outside of their specific domain.

I may know loads about Python but nothing about database management or proxies or Linux. If Docker can abstract a lot of the complexities away and present a unified way to configure and manage them, where's the bad?

That is definitely one of the crowds, but there are also people like me who are just sick and tired of dealing with Python, Node, and Ruby dependencies. The install process for services has only become more convoluted over the years. And then you show me an option where I can literally just slap down a compose.yml and hit "docker compose up -d" and be done? Fuck yeah I'm using that.

No, it's popular because it allows people/companies to run things without needing to deal with updates and dependencies manually.

This only happens if you essentially tell docker "I want this app to listen on 0.0.0.0:80"

If you don't do that, then it doesn't punch a hole through UFW either.
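To make that concrete, here is a rough sketch of how a Compose-style short port mapping decides which host address gets bound. It assumes the Docker daemon's default host binding address has not been changed, in which case a mapping without an explicit host IP is published on all interfaces (0.0.0.0). The helper names `published_host_ip` and `is_exposed_to_all` are made up for illustration and are not part of Docker or Compose.

```python
import ipaddress


def published_host_ip(port_spec: str) -> str:
    """Return the host IP a short port mapping such as "8080:80" binds to.

    Hypothetical helper: handles "CONTAINER", "HOST:CONTAINER",
    "IP:HOST:CONTAINER" and "[IPV6]:HOST:CONTAINER", with an optional
    "/tcp" or "/udp" suffix.
    """
    spec = port_spec.split("/", 1)[0]  # drop the protocol suffix, if any
    if spec.startswith("["):
        # Bracketed IPv6 host address, e.g. "[::1]:6001:6001"
        host_ip, _, _ = spec[1:].partition("]")
        return host_ip
    parts = spec.split(":")
    if len(parts) == 3:
        # Explicit IPv4 host address, e.g. "127.0.0.1:5432:5432"
        return parts[0]
    # No host IP given: assumed default is every interface
    return "0.0.0.0"


def is_exposed_to_all(port_spec: str) -> bool:
    """True if the mapping is published on every host interface."""
    return ipaddress.ip_address(published_host_ip(port_spec)).is_unspecified


for spec in ["5432:5432", "127.0.0.1:5432:5432", "[::1]:6001:6001"]:
    print(spec, "->", "all interfaces" if is_exposed_to_all(spec) else "restricted")
```

Writing the mapping as "127.0.0.1:5432:5432" keeps the database reachable from the host itself without publishing it to the network, and leaving the mapping out entirely is enough when only other containers need to talk to it.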

You're forgetting the part where they had an option to disable this fuckery, and then proceeded to move it twice - exposing containers to everyone by default.

I had to clean up compromised services twice because of it.

This is why I install on bare metal, baby!

For all the raving about podman, it's dumb too. I've seen multiple container networks stupidly route traffic across each other when they shouldn't. Yay services kept running, but it defeats the purpose. Networking should be so hard that it doesn't work unless it is configured correctly.

Or maybe it should be easy to configure correctly?

That's asking a lot, these days.

Instructions unclear, now it's hard to use and to configure

rootless podman and sockets ❤️

I mean if you're hosting anything publicly, you really should have a dedicated firewall

Somehow I think that's on ufw, not docker. A firewall shouldn't depend on applications playing by its rules.

ufw just manages iptables rules, if docker overrides those it's on them IMO

Feels weird that an application is allowed to override iptables though. I get that when it's installed with root everything's off the table, but still....

Not really.

Both docker and ufw edit iptables rules.

If you instruct docker to expose a port, it will do so.

If you instruct ufw to block a port, it will only do so if you haven't explicitly exposed that port in docker.

It's a common gotcha but it's not really a shortcoming of docker.

Docker specifically creates rules for itself which are by default open to everyone. UFW (and the underlying nftables/iptables) just does as it's told by the system root (via docker). I can't really blame the system when it does what it's told to do, and it's been the administrator's job to manage that in a reasonable way since forever.
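A toy model of why the two tools talk past each other, in case it helps. This is a simplification and not how netfilter is implemented: it assumes the usual setup where Docker adds a DNAT rule for each published port, so that traffic is routed through the FORWARD chain, while a plain `ufw deny <port>` rule lives in the INPUT chain. The function `verdict` and its arguments are invented for this sketch.

```python
def verdict(dst_port: int, docker_published: set, ufw_denied: set) -> str:
    """Toy model: which chain decides the fate of an inbound packet."""
    if dst_port in docker_published:
        # Docker's DNAT rule rewrites the destination to the container,
        # so the packet is forwarded and never reaches UFW's INPUT rules.
        return "FORWARD: accepted by Docker's rules"
    if dst_port in ufw_denied:
        # Traffic for the host itself is filtered in INPUT, where UFW's rule lives.
        return "INPUT: dropped by UFW"
    return "INPUT: UFW default policy applies"


# Port 5432 is published by Docker *and* denied in UFW: Docker's rule wins.
print(verdict(5432, docker_published={5432}, ufw_denied={5432}))
# Port 22 is only denied in UFW: the deny rule applies as expected.
print(verdict(22, docker_published={5432}, ufw_denied={22}))
```

In this model the UFW rule is never consulted for a published port, which matches the behaviour described above: the deny only takes effect for ports Docker has not been told to publish.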

And (not related to linux or docker in any way) there's still big commercial software which highly paid consultants install and the very first thing they do is to turn the firewall off....

On windows (coughing)

We use Firewalld integration with Docker instead due to issues with UFW. Didn't face any major issues with it.

I also ended up using firewalld and it mostly worked, although I first had to change some zone configs.

Ok

So, confession time.

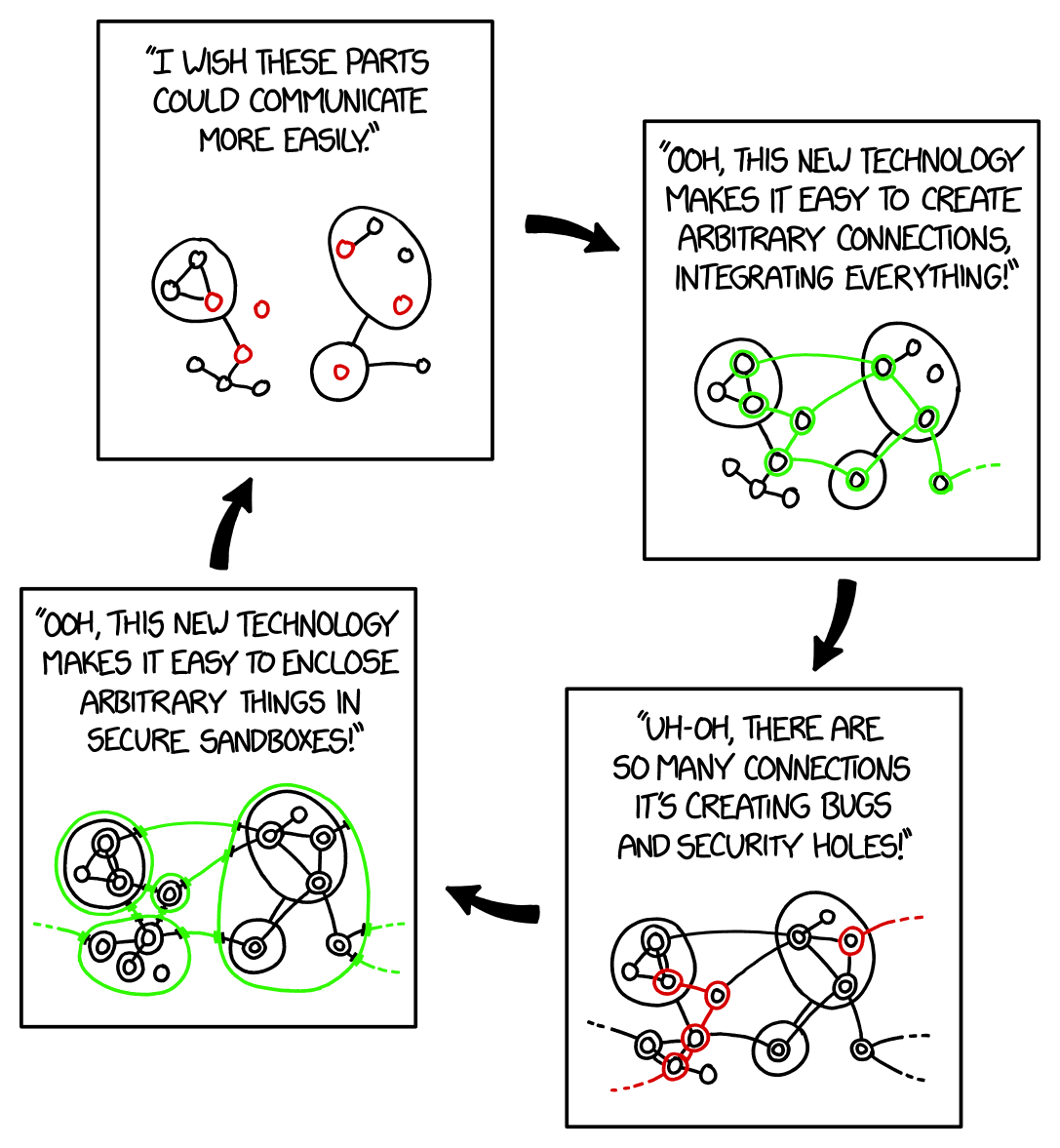

I don't understand docker at all. Everyone at work says "but it makes things so easy." But it doesn't make things easy. It puts everything in a box, executes things in a box, and you have to pull other images to use in your images, and it's all spaghetti in the end anyway.

If I can build an Angular app the same on my Linux machine and my Windows PC, and everything works identically on either, and the only thing I really have to make sure of is that the deployment environment has node and the angular CLI installed, how is that not simpler than everything you need to do to set up a goddamn container?

This is less of an issue with JS, but say you're developing this C++ application. It relies on several dynamically linked libraries. So to run it, you need to install all of these libraries and make sure the versions are compatible and don't cause weird issues that didn't happen with the versions on the dev's machine. These libraries aren't available in your distro's package manager (only as RPM) so you will have to clone them from git and install all of them manually. This quickly turns into a hassle, and it's much easier to just prepare one image and ship it, knowing the entire environment is the same as when it was tested.

However, the primary reason I use it is because I want to isolate software from the host system. It prevents clutter and allows me to just put all the data in designated structured folders. It also isolates the services when they get infected with malware.

> have to make sure of is that the deployment environment has node and the angular CLI installed

I have spent so many fucking hours trying to coordinate the correct Node version to a given OS version, fucked around with all sorts of Node management tools, ran into so many glibc compat problems, and regularly found myself blowing away the packages cache before Yarn fixed their shit and even then there's still a serious problem a few times a year.

No. Fuck no, you can pry Docker out of my cold dead hands, I'm not wasting literal man-weeks of time every year on that shit again.

(Sorry, that was an aggressive response and none of it was actually aimed at you, I just fucking hate managing Node.js manually at scale.)

Sure, but that's an Angular app, and you already know how to manage its environment.

People self host all sorts of things, with dozens of services in their home server.

They don't need to know how to manage the environment for these services because docker "makes everything so easy".

I've been playing with systemd-nspawn for my containers recently, and I've been enjoying it!