Which explains why C-suites push it so hard for everyone

Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

AI, in this case, refers to LLMs, GPT technology, and anything listed as "AI" meant to increase market valuations.

Well, they have the one job that actually can be replaced by “AI” (though in most cases it'd be more beneficial to just eliminate it altogether).

Which is acting like they know everything about everyone else's jobs, while making up wholly inaccurate assumptions

That's also why the billionaires love it so much:

they very rarely have much if any technical expertise, but imagine that they just have to throw enough money at AI and it'll make them look like the geniuses they already see themselves as.

Which ironically means that they are the easiest people to replace with AI.

... They just... get to own them.

For some reason.

> billionaires love it

They think it knows everything because they know nothing.

That, and it talks to them like every jellyfish yes-man that they interact with.

Which subsequently seems to be why so many regular-ass people like it, because it talks to them like they’re a billionaire genius who might accidentally drop some money while it’s blowing smoke up their ass.

I literally have to give my local LLM a bit of a custom prompt to get it to stop being so overly praising of me and the things that I say.

It's annoying; it reads as patronizing to me.

Sure, every once in a while I feel like I do come up with an actually neat or interesting idea... but if you went by the default of most LLMs, they basically act like they're a teenager in a toxic, codependent relationship with you.

They are insanely sycophantic, reassure you that all your dumbest ideas and most mundane observations are like, groundbreaking intellectual achievements, all your ridiculous and nonsensical and inconsequential worries and troubles are the most serious and profound experiences that have ever happened in the history of the universe.

Oh, and they're also absurdly suggestible about most things, unless you tell them not to be.

... they're fluffers.

They appeal to anyone's innate narcissism and amplify it into egomania.

Ironically, you could maybe say that they're programming people to be NPCs, and the template they're programming them to follow is 'Main Character Syndrome'.

AI has been excellent at teaching me to program in new languages. It knows everything about all languages - except the ones I'm already familiar with. It's terrible at those.

and all the things we aren’t experts in, we’re unqualified to be the evaluators of the AI’s output

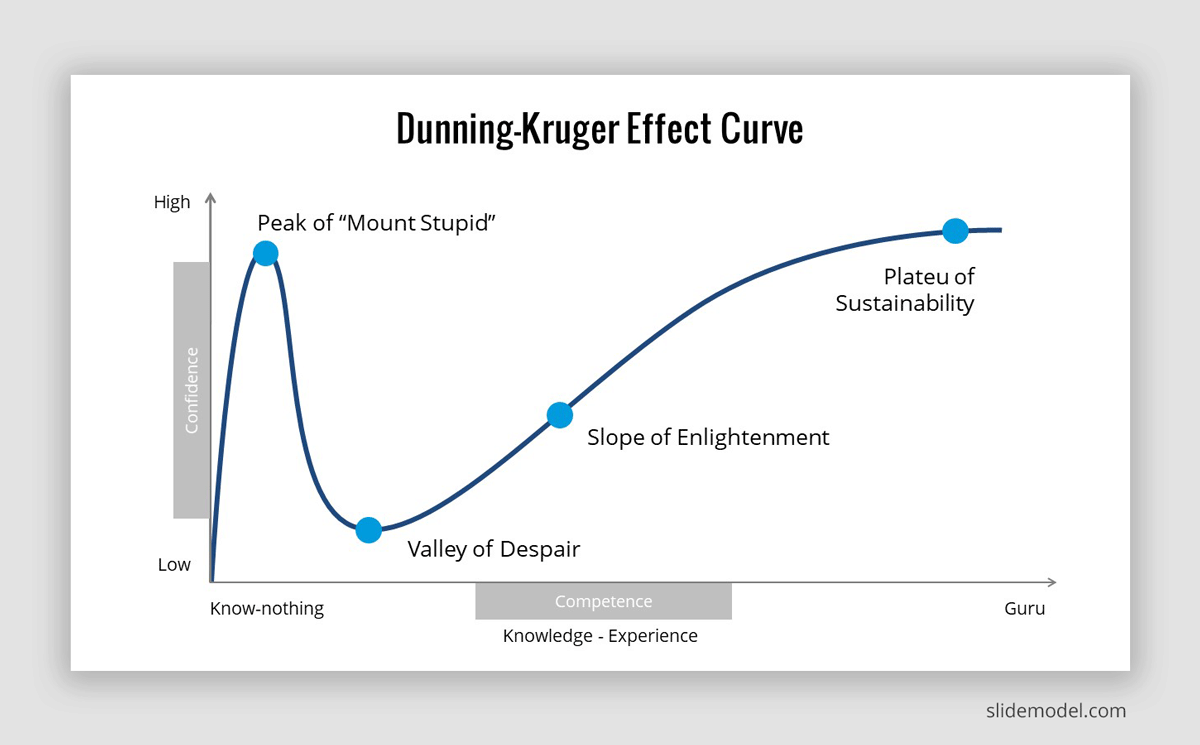

Yup, that's exactly the Dunning-Kruger mechanism at work.

And that's exactly why AI is not as useful as people think. If you don't know something, you can't evaluate the correctness of the output, and if you do know something, why bother using AI?

This is why leadership loves it. They don't know shit about fuck.

Ignorance and lack of respect for other fields of study, I'd say. Generative AI is the perfect tool for narcissists because it has the potential to lock them in a world where only their expertise matters and only their opinion is validated.

I was just thinking it's like an offshoot of this effect. It also explains all the tech bros who are all-in on AI as they're experts in nothing.

IDK about that. I'm a professional slop maker, and I think it could replace me easily.

Funnily enough, all my engineering professors seem to encourage the use of genAI for anything as long as it’s “not doing the learning for you”

What’s funny is that there’s basically no practical use for GenAI in engineering in the first place. Images like technical drawings need to be precise and code written for FDM/FEA etc. needs to be validated by some kind of mathematical model you derived yourself.

They say “it’s a useful new tool” and when I ask “what is it useful for” they typically have no answer besides “writing grant proposals” lol

There are lots of useful applications for machine learning in engineering, but very few if any practical applications for genAI.

> “writing grant proposals”

I mean, that's a time suck for most researchers.

It's why managers fucking love GenAI.

My personal take is that GenAI is ok for personal entertainment and for things that are ultimately meaningless. Making wallpapers for your phone, maps for your RPG campaign, personal RP, that sort of thing.

'I'll just use it for meaningless stuff that nobody was going to get paid for either way' is, at surface level, a reasonable attitude: personal songs generated for friends as in-jokes, artwork for home labels, birthday parties, and your examples. All fair, because nobody was gonna pay for it anyway, so no harm to makers.

But I don't personally use them for any of those things. Some of my reasons: I figure it's just investor-subsidized CPU cycles burning power somewhere (environmental); that use case will never be a business model that makes any money (propping up the bubble); it dulls and avoids my own art-making skills, which I think everyone should work on (personal development atrophy); and it builds reliance on proprietary platforms. So I'd rather just not, and hopefully see the whole AI techbro bubble crash sooner rather than later.

This actually relates, in a weird but interesting way, to how people get broken out of conspiracy theories.

One very common theme that's reported by people who get themselves out of a conspiracy theory is that their breaking point is when the conspiracy asserts a fact that they know - based on real expertise of their own - to be false. So, like, you get a flat-earther who is a photography expert and their breaking point is when a bunch of the evidence relies on things about photography that they know aren't true. Or you get some MAGA person who hits their breaking point over the tariffs because they work in import/export and they actually know a bunch of stuff about how tariffs work.

Basically, whenever you're trying to disabuse people of false notions, the best way to start is always the same; figure out what they know (in the sense of things that they actually have true, well founded, factual knowledge of) and work from there. People enjoy misinformation when it affirms their beliefs and builds up their ego. But when misinformation runs counter to their own expertise, they are forced to either accept that they are not actually an expert, or reject the misinformation, and generally they'll reject the misinformation, because accepting they're not an expert means giving up on a huge part of their identity and their self-esteem.

It's also not always strictly necessary for the expertise to actually be well founded. This is why the Epstein files are such a huge danger to the Trump admin. A huge portion of MAGA spent the last decade basically becoming "experts" in "the evil pedophile conspiracy that has taken over the government", and they cannot figure out how to reconcile their "expertise" with Trump and his admin constantly backpedalling on releasing the files. Basically they've got a tiny piece of the truth - there really is a conspiracy of powerful elite pedophiles out there, they're just not hanging out in non-existent pizza parlour basements and dosing on adrenochrome - and they've built a massive fiction around that, but that piece of the truth is still enough to conflict with the false reality that Trump wants them to buy into.

let's not confuse LLMs, AI, and automation.

AI flies planes when the pilots are unconscious.

automation does menial repetitive tasks.

LLMs support fascism and destroy economies, ecologies, and societies.

I'd even go a step further and say your last point is about generative LLMs, since text classification and sentiment analysis are also pretty benign.

It's tricky because we're having a social conversation about something that's been mislabeled, and the label has been misused dozens of times as well.

It's like trying to talk about knife safety when you only have the word "pointy".

So Gen AI is like Dan Brown, the more you know about the subject the more it sucks

AI only seems good when you don't know enough about any given topic to notice that it is wrong 70% of the time.

This is concerning when CEOs and other people in charge seem to think it is good at everything, as this means they don't know a god damn thing about fuck all.

I agree with this. I tell people to ask it questions about things they know about. Then, when they see how many errors it makes, I ask them why they assume it's any better on topics they don't know about.

You see the same effect in journalism. News stories seem pretty authoritative until you read one about a subject you know.

AI is very useful for our business. (/s) We get paid by the hour, and our billing hours have exploded simply from being hired to clean up after "vibe coders'" shit.

We deal with massive amounts of data and AI can't write optimized code for shit. Hunting down system slowdowns thanks to bad code is a constant gig with our clients.

The breadth of knowledge demonstrated by AI gives a false impression of its depth.

Generalists can be really good at getting stuff done. They can quickly identify the experts needed when something is beyond their scope. Unfortunately, overconfident generalists tend not to bring the experts in to help.

they’re both wrong, and they’re both right

an AI can create concept art for a writer to better visualise their world to generate ideas in a pinch, but it shouldn’t ever be what you use to show anyone else: you still need real concept art

an AI can also create writing for their art so that they can flesh out a back story to make their visual art more detailed, but it’s not going to write anything that you’d want anyone to read as a book or act in for a movie

both things can be used for the described purpose, and both things are inadequate for quality output

we’ve had this juxtaposition for a while: “redneck X”… they’re scraped-together, barely functional versions of the thing you’re trying to do, on the cheap, with home-made tools. you wouldn’t sell it, but it’s kinda fine for this 1 situation with many many asterisks

professionals often don’t like when someone can hack together something functional because they know the many many places where that thing falls down when you talk about long-term, and the general case… but sometimes a hack job solves a specific problem in a specific situation for a moment for cheap and that’s all you need

(just don’t try it with electricity or your health: the consequences of not understanding this complexity are death… of course ;p)

> an AI can create concept art for a writer to better visualise their world to generate ideas in a pinch, but it shouldn’t ever be what you use to show anyone else: you still need real concept art

so... if it's only for creating visuals for yourself and not for showing anyone else... I already have a perfectly fine imagination that I've been using for that purpose for as long as I can remember. Maybe it's useful for these people who I've been told have no imagination, but to me, it seems awfully redundant.

there are professors of comics?

It's an industry worth over $2bn, it'd be odd if there weren't people studying it.

I just focus on the parts of what I do know that AI can help me with, rather than trying to say AI can replace other people but not me. That's some dumb shit.

Orrr...hear me out, this is gonna sound wild... Or we don't believe that this debate is even one we need to have until we have actual fucking AI, which machine learning slop IS NOT. And seeing the kind of morons hyping "AI", chances are, mankind will never develop true AI because the funding goes to the morons screaming loudest, instead of actual experts slash scientists.

I'm a programmer, and I think both are an art and can't be replicated well by AI. Sure, you get an acceptable pic, and you may get something written well (okay, I'm stretching more here: I haven't read anything by AI that makes me think that, but my exposure has been minimal, so I'm giving some leeway), but human art has its own quality.

Just reminded me of a bit of the Dune novels, people were putting rocks out to be sandblasted by a dust storm and selling as art. I guess I agree with Duncan on that.

Wait, art needs the emotion bit, huh? Probably to mean more than generated stuff. Another realization, but good to understand. I do think the human component is necessary... until it isn't, but not today.

The only good AI I've come across is the one I use for denoising cycles renders in Blender3D, as that's something that a human cannot reasonably do.

That's the only scenario something like AI has any use as a "tool"; doing things humans cannot reasonably do.